
SUMMARY:

You run a marketing test. You get a lift. Or a loss. Or maybe it was inconclusive. But what’s next? Read on for a step-by-step approach packed with real-world examples and accompanied by an AI workflow to do it with you.

Action Box: AI workflow can help do this with you

MeclabsAI can do it with you. Try the multi-agent workflow (MeclabsAI is MarketingSherpa’s parent company).

For this article, we’re starting in the middle of the story. I’ve made the assumption that you’ve already tested something, in some way.

Before you run your next test, you want to evaluate your previous test. Did you prove or disprove your hypothesis? What worked and what didn’t…and why? Which messages resonated? Which fell flat? What customer insights can you gain from this?

Much testing is quantitative, so to shed light on what the numerical results mean, you may want to gather customer feedback or look at behavioral data beyond the test's main KPI. Focus on pain points and customer motivations.

You could use a table to list your performance metrics and insights. It might show that the overall conversion rate on the page was down and the bounce rate was up, indicating a misalignment with customer needs (or perhaps with the needs of one specific customer segment). But the clickthrough rate from the ads to the page increased, showing promise in the value-oriented messages you tested.

This scenario would suggest that you did not carry the value you communicated in the ad through to the landing page, and that a landing page test focused on that value might be a good next step.

Here’s an example of a company that looked beyond the numbers to come up with test ideas.

“We were running Facebook ads, but after a few months, the click-through rate stalled at 0.9%,” said Kevin Wasonga, growth and partnerships lead, PaystubHero.

The team went back through customer support chat logs and support tickets to see what people actually asked before signing up. Certain phrases kept popping up, like ‘proof of income for my new job’ and ‘how to create a paystub fast.’

The team previously thought most users were creating paystubs for employees, but they realized that many employees were creating paystubs themselves. That completely changed the messaging, because before, they were speaking only to small business owners.

The team turned these insights into a headline and reworked the ad copy to focus on speed (‘Download in minutes, ready for HR’). The next A/B test showed a CTR jump to 1.6%, and cost per sign-up dropped 27% in two weeks.
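For a sense of scale, relative lift is the change divided by the starting value. Here is a minimal Python sketch using only the two CTRs quoted above; the function name `relative_lift` is our own, not a PaystubHero tool.

```python
def relative_lift(control: float, treatment: float) -> float:
    """Relative change of treatment over control, as a fraction."""
    if control == 0:
        raise ValueError("control rate must be non-zero")
    return (treatment - control) / control

# CTRs from the PaystubHero example, in percent
print(f"{relative_lift(0.9, 1.6):.1%}")  # 77.8%
```

In other words, going from a 0.9% to a 1.6% CTR is a gain of 0.7 percentage points, or roughly a 78% relative lift.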

While the primary metric the team usually uses for its tests is conversion rate, they also look at bounce rate and time on page. Sometimes a message might not increase conversions instantly, but it keeps people engaged longer, which can be a win in a longer funnel.

After running a test, if the result is flat or slightly positive, they make a tweak for their next test, sometimes adjusting the wording or placement. If it’s a big loss, they usually kill it and try a fresh angle. “We keep a shared Google Sheet with every test, what we changed, and the results. From this, new team members don’t waste time repeating failed tests,” Wasonga said.
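Wasonga's rule of thumb can be written down as a simple triage function so everyone on the team applies it the same way. This is a sketch under assumptions: the team did not publish numeric cutoffs, so the -10% and +10% thresholds, the `next_step` name, and the "adopt" branch for a clear win are all hypothetical additions.

```python
from enum import Enum

class NextStep(Enum):
    ITERATE = "tweak wording or placement, then retest"
    KILL = "drop the idea and try a fresh angle"
    ADOPT = "keep the treatment as the new control"

# Hypothetical cutoffs on relative lift; the article gives no numbers.
BIG_LOSS = -0.10
CLEAR_WIN = 0.10

def next_step(relative_lift: float) -> NextStep:
    """Map a test's relative lift to a follow-up action."""
    if relative_lift <= BIG_LOSS:
        return NextStep.KILL
    if relative_lift >= CLEAR_WIN:
        return NextStep.ADOPT  # assumption: not part of the quoted rule
    # Flat or slightly positive results land here (small losses too, an assumption)
    return NextStep.ITERATE

print(next_step(0.03).value)  # tweak wording or placement, then retest
```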

And as in the above test, direct customer interactions help inform their tests. “Surveys can be biased, but when a customer is in a live chat, they’re telling you exactly what’s confusing them or slowing them down,” he said. “We’ll take a recurring customer question and ask, ‘If we address this directly in our headline or CTA, will conversions go up?’ That becomes our hypothesis.”

For example, if customers keep asking ‘Is this legal?’ the team might test a trust badge or compliance statement at the top of the page.

“Our biggest win was adding a short ‘Why people use our generator’ section. That came from a common chat question: ‘Why should I use you instead of [competitor]?’ That little section boosted conversions,” Wasonga explained.

Once you understand what you learned about the customer last time, set clear targets for your next test. Decide what you want to improve.

For example, if in the last test you were able to increase engagement with an ad to get more people to a landing page, the next test could aim to improve conversion on that landing page. Align these goals with not only your business priorities, but also customer needs. In this case, the test could aim to increase conversion (business goal) by making the offer clearer to the three key prospect types your business serves (customer needs).

Now hypothesize outcomes. For example, if you were to use the Meclabs Scientific Messaging Hypothesis (Meclabs is MarketingSherpa’s parent company), you would frame your hypothesis using the structure:

Keep the statement focused and measurable. Here’s an example:

The team at Indy Roof & Restoration takes an iterative approach to testing to continually optimize its landing pages. The team takes a high-performing landing page as the control and tests a treatment against it. If the new variant loses, they drop it, and their next test pits another variant against the original control. If the variant wins, it becomes the new control, and their next test pits new variants against it.

With this approach, the team has been able to increase its conversion rate by 52.55% since it began testing on August 12, 2024.
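The control-versus-variant cycle described above is a sequential champion/challenger loop. A minimal Python sketch follows, assuming a hypothetical `beats` function that stands in for whatever comparison (ideally a statistically valid one) decides a winner; the conversion rates in the usage lines are made-up placeholders, not Indy Roof & Restoration data.

```python
from typing import Callable, Iterable

def iterate_control(control: str,
                    variants: Iterable[str],
                    beats: Callable[[str, str], bool]) -> str:
    """Test each variant against the current control, one test at a time.

    A losing variant is dropped and the control stays; a winning variant
    becomes the new control that later variants must beat.
    """
    for variant in variants:
        if beats(variant, control):
            control = variant  # winner is promoted to the new control
    return control

# Made-up conversion rates for illustration only
rates = {"original": 0.040, "v1": 0.035, "v2": 0.052, "v3": 0.049}
final_control = iterate_control(
    "original", ["v1", "v2", "v3"], beats=lambda v, c: rates[v] > rates[c]
)
print(final_control)  # v2
```

Here `v1` loses to the original, `v2` wins and becomes the control, and `v3` then has to beat `v2` rather than the original, which it does not.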

Let’s look at one of their testing cycles. First, the team tested a hypothesis of focusing more on customer motivation. “This was a landing page test we conducted for all Google Paid Search clicks going to our Unbounce landing page,” said Nick Segar, director of marketing, Indy Roof & Restoration.

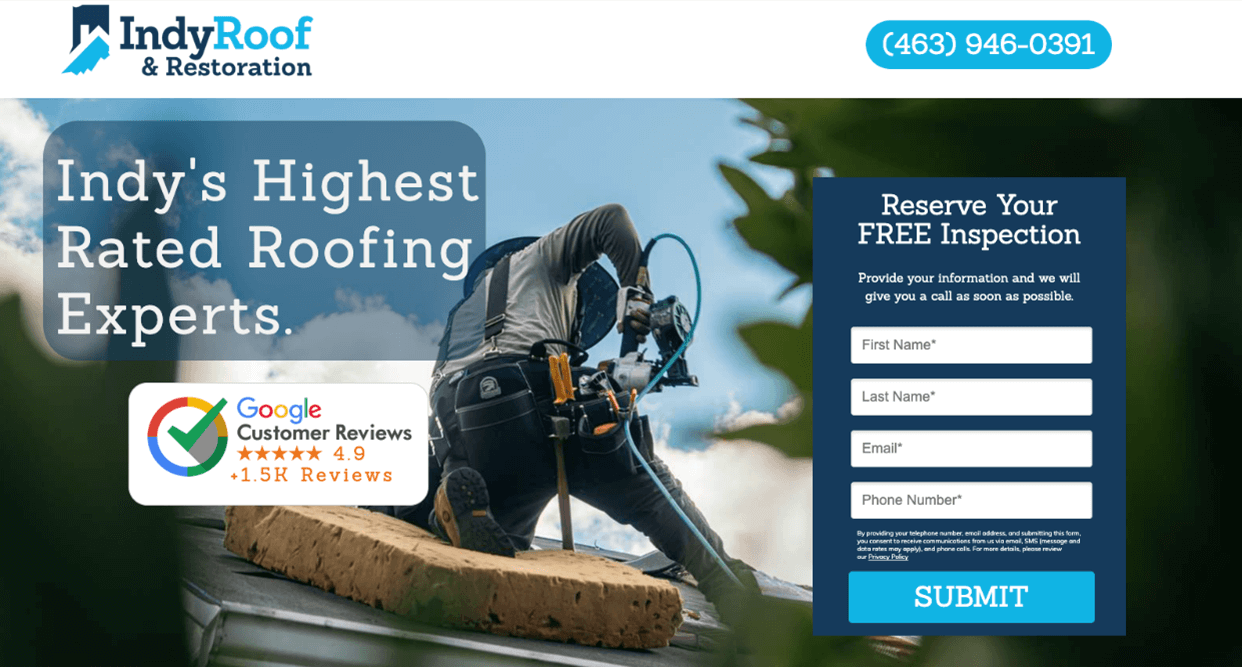

The control’s headline touted the company (‘Indy’s Highest Rated Roofing Experts.’) and the form focused on collecting information from the customer.

Creative Sample: Control

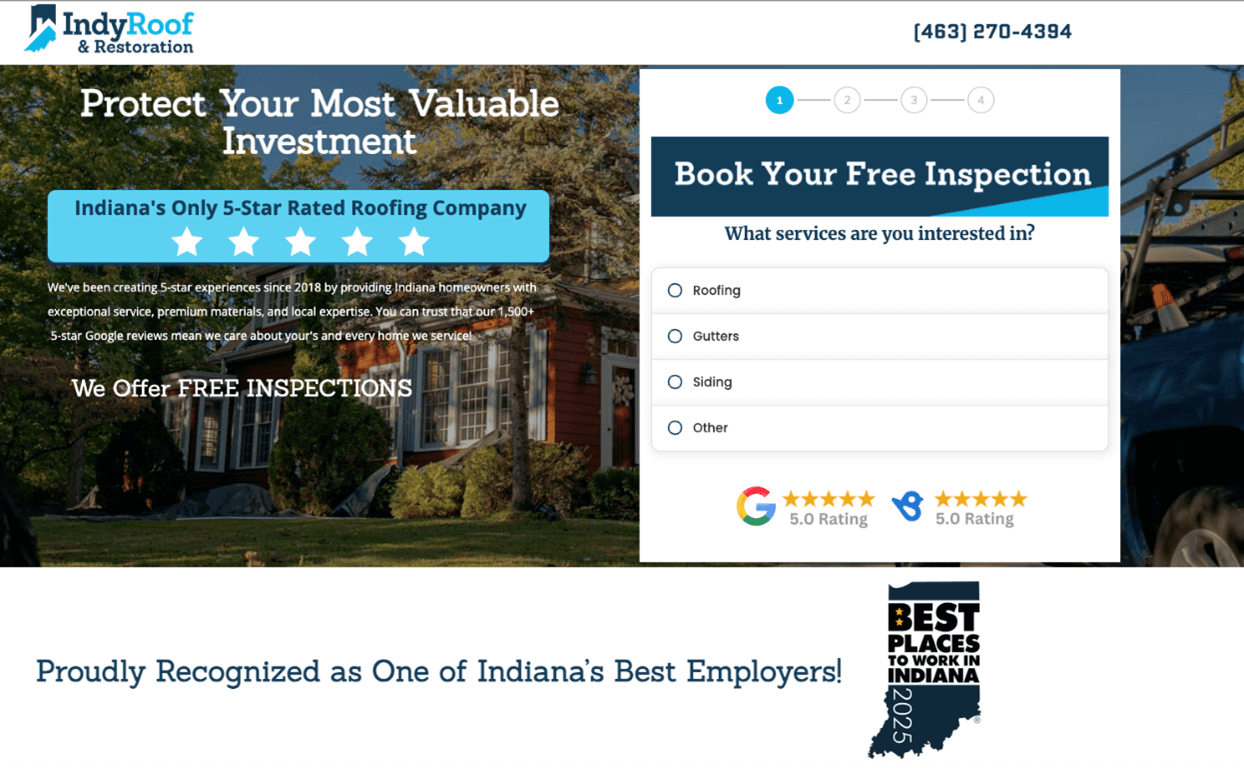

In the treatment, the headline focused on the value to the customer (‘Protect Your Most Valuable Investment’) and the form first asked what services they were interested in (roofing, gutter, siding, or other) before getting any contact info.

Creative Sample: Treatment

The treatment had a 6.45% conversion rate – 31% higher than the control.
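The control's rate isn't stated, but it can be backed out of the two numbers given. Assuming the 31% is a relative lift (treatment rate divided by control rate, minus one) and allowing for rounding, the control converted at roughly 4.9%:

$$
r_{\text{control}} \approx \frac{r_{\text{treatment}}}{1 + \text{lift}} = \frac{6.45\%}{1.31} \approx 4.92\%
$$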

The team also learned that the new form better qualified the customer by giving the consumer the chance to state ‘why’ they are reaching out, which led to a higher sales conversion rate.

So the objective for their next test is to find ways to tap into customer motivation even more. Their hypothesis: recognizing the keywords a consumer searched and dynamically routing that consumer to the landing page best optimized for their use case will further increase conversion. “The next thing we are testing is utilizing Unbounce's 'Smart Traffic' to route visitors to the page variant where they're most likely to convert,” Segar explained.

Once you have an objective and hypothesis for your next test, brainstorm alternatives to your control.

Use insights from your data review and qualitative customer and prospect feedback. Craft alternative headlines and key message variations that speak directly to your audience.

Apply a value proposition lens. How can you use art, copy, and other elements to highlight clarity, credibility, and distinct value? Avoid fluff – get straight to the benefit.

One way to brainstorm is on a whiteboard. Create a simple flowchart linking customer needs to your proposed messaging, visuals, and other elements.

When you’re doing so, it can help to print out the previous step and tape it to the wall in front of the whiteboard to remind the team what the prospect saw right before getting to the step you’re testing.

Look, it’s hard to write one good headline for a page. Design one good image. I get it.

And then if you’re testing, you need at least two. So that’s even harder.

But in a perfect world, you’ll come up with many options (MeclabsAI can help), not just the two you can test right away.

When you have more good ideas for a hypothesis than you can test, you want to bring your golden gut into the test planning and prioritize based on the impact you think they will have.

Rank your messages. Use a decision matrix to evaluate potential impact. Consider factors like customer motivation, perceived value, and how the message lowers resistance.
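A decision matrix can be as simple as a weighted score per candidate. The sketch below assumes each teammate's ratings have already been averaged into a 1 to 5 score per factor; the three factors come from the paragraph above, while the weights, the scale, and the variant ratings are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0); tune them to your own priorities.
WEIGHTS = {"motivation": 0.4, "value": 0.4, "resistance": 0.2}

@dataclass
class Candidate:
    name: str
    motivation: float  # 1-5: how strongly it taps customer motivation
    value: float       # 1-5: how clearly it conveys perceived value
    resistance: float  # 1-5: how much it lowers resistance (5 = lowers it most)

    def score(self) -> float:
        """Weighted sum of the three factor ratings."""
        return (WEIGHTS["motivation"] * self.motivation
                + WEIGHTS["value"] * self.value
                + WEIGHTS["resistance"] * self.resistance)

def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Highest weighted score first."""
    return sorted(candidates, key=lambda c: c.score(), reverse=True)

# Placeholder ratings for three hypothetical variants
shortlist = [
    Candidate("Variant A", motivation=4, value=3, resistance=2),
    Candidate("Variant B", motivation=3, value=5, resistance=4),
    Candidate("Variant C", motivation=5, value=4, resistance=3),
]
for c in rank(shortlist):
    print(f"{c.name}: {c.score():.1f}")
```

The score is only a starting point for the team debate, not a replacement for it.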

Have your team debate the possibilities and then vote on the variants they think are most likely to succeed. Remember, you can always test other variants in future tests; you are just prioritizing what could be most effective right now.

And I know that when we’re talking about something like experimentation, this all sounds very boring – but make it fun. What you’re really doing is tapping into your curiosity for how you can best serve a customer, and then your ideal customer will tell you based on the results of your test if you were right or need to try something else.

If you’re the team leader, this is also a chance to instill a culture of curiosity and contribution from everyone on the team. Be gracious if your idea loses, and celebrate the winner. Here’s what Susanne Rodriguez, chief marketing officer, Auvik, told me in B2B Revenue Marketing: Be an uncertainty killer (podcast episode #120):

“I love having my opinion challenged. And so when someone proves me wrong, like this example with Tara, I really encourage them to share their victory with the team. So we can create this team culture that feels empowered to take chances. Because, you know, we talked about earlier, I'm really direct and this whole concept of providing clarity and being open and honest.

So just because I'm expressing my opinion where I might not like something, I don't want the team to feel like it's shot down and we can’t go ahead and try it. So we celebrate what we call, ‘proving Suzanne wrong’ when we have these instances where I like being challenged and testing new things. And we really celebrate when, I guess I'm proven wrong.”

Also, when prioritizing, remember that while we as marketers tend to focus on art and copy, sometimes the most impactful element to test isn’t a headline or hero image, as in our next example.

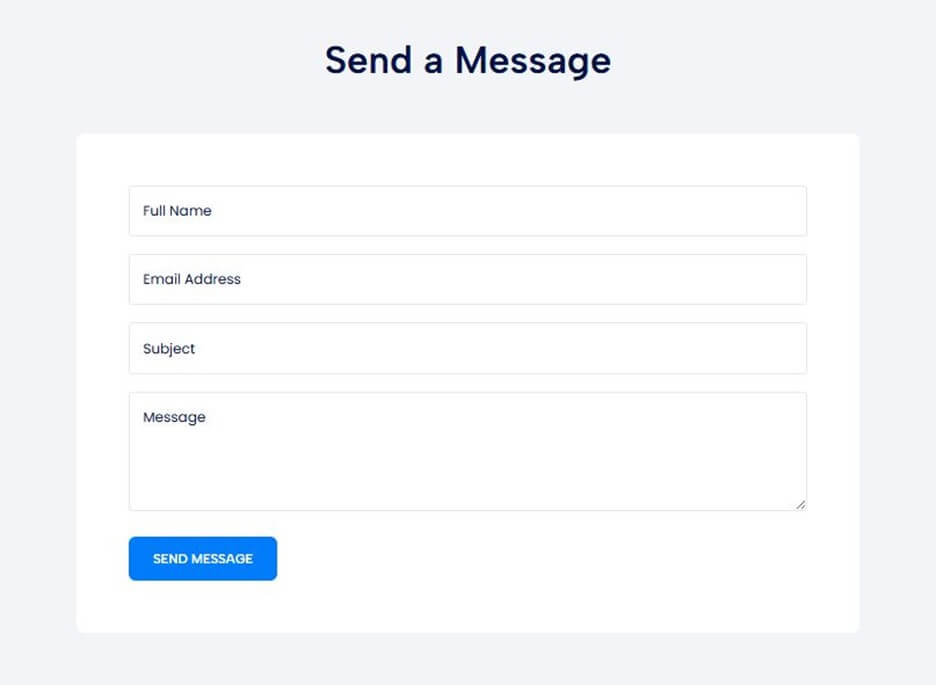

A landing page for private drivers in Mexico City had a generic ‘contact us for a quote’ form. Prospective riders had to enter their trip information in a blank box, submit the form, and then wait for a response.

Creative Sample: ‘Send a Message’ generic contact form

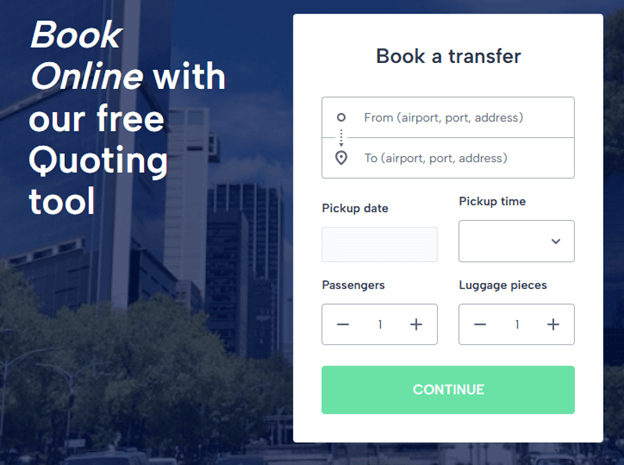

The team replaced this form with an instant quote form that asked for key variables about the trip:

The price was generated instantly, so the prospect no longer had to wait for a follow-up.

Creative Sample: ‘Book a transfer’ detailed, instant quote form

While the team doesn’t have specific analytics to compare performance, they estimate conversions have roughly doubled based on operational observations.

“It built instant trust – we were upfront on price from first click,” said Martin Weidemann, owner, Mexico City Private Driver.

Once you’ve made the decisions in the previous steps, it’s time to actually run the test. So let’s talk testing.

The example in Step #4 is not a controlled experiment. And for this article I’m using the broadest definition of the word test – namely, try something else out – because many smaller businesses cannot run true, scientifically valid experiments.

However, I’m including this step for the businesses that can run controlled tests. And even for small businesses, I think it helps to understand the elements of a statistically valid experiment, so they can move toward one as best they can.

A controlled test accounts for the following validity factors to ensure that the results you observe are in fact caused by the change you made between the control and the treatment, and not by something else. I go deeper into validity in Online Marketing Tests: How do you know you’re really learning anything?, but at a high level, here are the things to look out for:

You also want to keep tests focused so you can pinpoint what changes actually make a difference in the results. If you just change a bunch of things and get a lift, that feels great in the short term, but what did you really learn to serve the customer better? How will those results affect your next step?

“It's important to design your test so that it only tests one variable at a time,” said Erica Korman, consultant, Emmet Marketing Consulting.

For example, Korman was working with a B2B cybersecurity company with $100M in annual revenue that was struggling with webinar attendance despite having a substantial subscriber base. Their standard email campaigns promoting cybersecurity training webinars used conventional urgency messaging like 'Last chance to register' in subject lines, resulting in disappointing 18% open rates and below-target registration numbers.

She hypothesized that FOMO-driven messaging would resonate more strongly with security professionals than traditional deadline pressure.

The team designed a controlled A/B test isolating only the subject line variable: 'Last chance to register' versus 'Don't miss the deadline!' Everything else remained identical – sender, send time, email body content, and call-to-action buttons.

“Group A gets A subject line, and group B gets B subject line but the content inside both emails are identical. You want to also schedule the emails at the exact same time so there's no variance around one timing being better than the other,” she said. “You also need to ensure your tests are reaching statistical significance. You can't run an A/B test on 10 people and look at the results as telling you they are repeatable and scalable. You need a statistically significant pool size to begin with.”
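To see why 10 people is not enough, here is a two-proportion z-test, a standard way to check whether two conversion or open rates differ by more than chance. This is a minimal sketch using only the Python standard library; Korman did not say which test her team used, and the group sizes below are hypothetical (the article does not give the list size).

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two rates.

    Returns (z, p_value), using the pooled proportion for the
    standard error under the null hypothesis of no difference.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical: 10 people split 5/5, with 1 vs. 2 opens
z_small, p_small = two_proportion_z_test(1, 5, 2, 5)
print(f"z={z_small:.2f}, p={p_small:.2f}")  # p is about 0.49: not significant

# Hypothetical: 18% vs. 22% open rates on 5,000 recipients per group
z_big, p_big = two_proportion_z_test(900, 5000, 1100, 5000)
print(f"z={z_big:.2f}, p={p_big:.2g}")  # z is about 5.0: p far below 0.05
```

The small test doubles the open rate and still can't rule out chance; the larger one detects a much smaller relative difference with confidence.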

The FOMO-focused subject line 'Don't miss the deadline!' drove a 23% increase in webinar registrations and boosted email open rates from 18% to 22%. For a company of this scale, this single subject line change translated to hundreds of additional qualified prospects attending their webinars each quarter.

Since this was a controlled experiment, where only one variable changed, the team was able to garner a broader insight from the test – security professionals, who deal with the consequences of missed threats daily, responded more strongly to loss-aversion messaging than time-based urgency.

“The first test was we can play off people's FOMO, will that get them to action an email? Yes! Ok so we see that people will take action if they feel like they're missing out on something ... why? Well, they feel like they may be missing an opportunity... ok that's another test... if we phrase it as an opportunity and test opportunity types, will that make a difference? Every test you answer a question. Then you can make some deductions from each test... but then you must test the deductions,” Korman said.

As Korman mentions above, you want to keep asking ‘why?’ when you get test results.

Review the results and compare your test outcomes against your objectives. Focus on conversion and engagement metrics to tell you what happened, but don’t hesitate to solicit customer feedback to better understand why.

And then… test again. Iterate based on these new customer discoveries. Tweak your hypotheses and message elements based on what the data shows. Keep refining your strategy – it’s an ongoing process. The more you learn about customers, the more you will want to know. Plus, your market is always changing due to macroeconomic (global booms and busts) and microeconomic (you have new competitors) factors.

The team at Davincified conducted an A/B test on copy to first-time customers:

They got the original idea to test emotion from listening to customers, and the emotional treatment did in fact achieve higher conversion. The team also plans to use customer language to inform the messaging strategy in its future tests.

“We listen to how customers are discussing the experience. The best copy is written there, not through brainstorming by oneself, but by listening to what and how people talk,” explained Jessie Brooks, product manager, Davincified.

As you continue to test and learn, record every step. Maintain detailed logs of test hypotheses, metrics, and results (you’re welcome to use the free test discovery log we’ve shared before).
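If you'd rather keep the log in code than in a spreadsheet, a CSV file works and opens in any spreadsheet tool. Here is a minimal sketch using Python's standard `csv` and `dataclasses` modules; the field names are our own illustration, not the layout of the MarketingSherpa log, and the example row summarizes Korman's subject line test from earlier in this article.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import TextIO

@dataclass
class TestRecord:
    """One row in a test log (illustrative fields, not a standard template)."""
    name: str
    hypothesis: str
    change: str            # the single variable changed between control and treatment
    primary_metric: str
    control_rate: float
    treatment_rate: float
    learning: str          # what the result says about the customer

def write_log(records: list[TestRecord], out: TextIO) -> None:
    """Write records as CSV with a header row."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(TestRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))

buf = io.StringIO()
write_log([TestRecord(
    name="Webinar email subject line",
    hypothesis="FOMO-driven messaging beats deadline pressure for security pros",
    change="Subject line only",
    primary_metric="Open rate",
    control_rate=0.18,
    treatment_rate=0.22,
    learning="Loss aversion resonates more than time-based urgency",
)], buf)
print(buf.getvalue())
```

To keep a running log on disk, open a file in append mode and skip the header after the first write.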

This documentation can help you create a feedback loop: you share your learnings with your team, those learnings inform team discussions, and those discussions give you constructive feedback to fine-tune your messaging and inform future tests.

Turn your knowledge assets into competitive advantage – MarketingSherpa has teamed up with parent company MeclabsAI to produce a research study. We are granting ten AI engineering vouchers worth $7,500 each to eligible companies. The goal is to test this hypothesis: Companies can rapidly build and scale high-value products and solutions by properly combining AI agents with Human experts.
